No, the computer will never work like a human brain, but it may inspire the computer industry to overcome the current bottleneck towards even faster and more efficient computers.

Dr. Mae-Wan Ho

Scientists are attempting to simulate the human brain with a supercomputer [1]. The Human Brain Project (HBP) is Europe’s own Big Science project, costing €1 billion over the next ten years; it was funded by the European Commission in January 2013. It is a step up from the Blue Brain Project based in Switzerland [2], led by neuroscientist Henry Markram at Ecole Polytechnique Fédérale de Lausanne, which claims to have created a virtual computer replica of part of the rat’s neocortex, a region of the brain that controls conscious thought, reasoning, and movement. The HBP has the ambitious goal of mapping all of the ~100 billion neurons in the human brain and their 100 trillion connections (synapses) [3], to “realistically simulate the human brain”, so that it will tell us “how the human mind works” and how to “cure brain disease”, and inspire new “neuromorphic” computers, among other things.

Not to be outdone, the New York Times revealed that the Obama administration is to unveil the Brain Activity Map (BAM) project to [4] “ultimately greatly expand our understanding of the healthy and diseased human brain.” Many have compared the project to the Human Genome Project, and it comes with a demand for research funding on the same scale, at $300 million a year for ten years. Other scientists have criticised the project for lacking clear goals, and for swallowing up funds, just when times are hard, that could have gone to support many smaller studies [5].

The HBP and BAM together have the makings of a science-fiction dystopia in which the supercomputer human brain tries to take over the world. That assumes the project succeeds in uploading a human brain to an electronic computer that is totally unencumbered by human emotions like love and empathy – all part of the real human brain’s wet chemistry that is inseparable from the rest of the body (see [6] Quantum Coherence and Conscious Experience, I-SIS scientific publication; [7] Living Rainbow H2O, ISIS Publication) – and that will therefore think nothing of exterminating real human beings with their stupid human failings.

The more likely outcome is an Exaflop: a spectacular failure of galactic dimensions. (The end goal in the computer industry is to build a supercomputer that processes 10¹⁸ operations per second, also an Exaflop, and I shall deal with that later.) By now, we have come to expect Big Science projects to fail spectacularly.

The Human Genome Project promised to tell us how to make a human being, free us from disease and other undesirable human conditions [8] (Ten years of the Human Genome, SiS 48). Instead, despite technological advances that enable geneticists to trawl through entire genomes at great rates, they have not even succeeded in identifying genes that predispose us to common chronic diseases [9] (Mystery of Missing Heritability Solved? SiS 53), and found [10] No Genes for Intelligence (SiS 53) either.

Similarly, the Hadron Collider is widely touted to have confirmed the Standard Model of the physical universe by having found a Higgs boson. Even if that is true, the Standard Model accounts for no more than 5 % of the universe, the rest being ‘dark energy and dark matter’ of which little can be said [11]. Results from the Hadron Collider have so far failed to validate the supersymmetry theories that are needed to patch up the Standard Model, for example by accounting for dark matter [12], leaving physicists in states ranging from despair and despondency to defensiveness and defiance. Surely, they should be at least a little bit humble and contrite, having already wasted billions of taxpayers’ money.

It is very unlikely that the Human Brain Project will succeed in telling us how the brain works, let alone the mind. First of all, whose human brain is going to be simulated? At this juncture, we already know that the brains of even identical twins are wired differently because of individual experience [10]. And yet, individuals whose neurons are wired differently nevertheless function normally and understand one another without any difficulty.

Second, there is no evidence that distinct memories are stored in synapses of specific neurons, however hard brain scientists have tried to look for them there. Instead, memory is most likely stored holographically [6, 13], i.e., delocalized over the whole brain (probably over the body and in the ambient field of a quantum holographic universe [6]). Memory is able to survive large brain lesions, and it takes almost no time at all for the human brain to recall memories of events that happened long, long ago.

Finally, the brain does not work like a computer; that much was already obvious to the pioneers of computing such as John von Neumann [14]. Neurons are far more complex than processors; they do not operate as simple logic gates, and may take an entirely different approach to processing.

As in the case of the other Big Science projects, the Human Brain Project is misguided from the start by outmoded and discredited mechanistic science.

Nevertheless, a more modest ambition – involving correspondingly scaled-down funding - to make computers more like the brain may bear some fruit.

The human brain has an estimated memory of 3.5 x 10¹⁵ bytes, operates at a speed of 2.2 petaflops (1 petaflop is 10¹⁵ operations per second), is about 1.2 L in size, and consumes 20 W in power. In comparison, the world’s fastest supercomputer in June 2011 was Fujitsu’s K computer, which has a memory of 30 x 10¹⁵ bytes, operates at a speed of 8.2 petaflops, is the size of a small warehouse, and consumes 12.6 MW [15]. In June 2012, the K computer was ousted as the world’s fastest computer by the American IBM Sequoia, a Blue Gene/Q supercomputer performing at 16.325 petaflops using 123 % more Central Processing Unit (CPU) processors while consuming only 7.9 MW, 37 % less than the K computer [16]. The race is clearly on for the world’s fastest, most power-efficient supercomputer. The huge amount of power consumed by current computers generates a great deal of heat that has to be carried away by bulky cooling systems that consume yet more power. The human brain is a clear winner by far in terms of size and power consumption.
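The comparison above can be made concrete by dividing each system's speed by its power draw to get operations per joule. The following is a minimal sketch in Python that uses only the figures quoted in this article. The names `SYSTEMS`, `ops_per_joule` and `efficiency_table` are hypothetical, chosen for illustration, and the sketch assumes that one brain "operation" can be compared directly with one floating-point operation, which is itself a debatable simplification.

```python
import math

# Speed and power figures as quoted in the article (not independently verified).
SYSTEMS = {
    "human brain": {"ops_per_s": 2.2e15, "watts": 20.0},
    "K computer (2011)": {"ops_per_s": 8.2e15, "watts": 12.6e6},
    "IBM Sequoia (2012)": {"ops_per_s": 16.325e15, "watts": 7.9e6},
}


def ops_per_joule(system):
    """Operations per joule = (operations per second) / (joules per second)."""
    return system["ops_per_s"] / system["watts"]


def efficiency_table(systems=SYSTEMS):
    """Map each system name to its operations per joule."""
    return {name: ops_per_joule(s) for name, s in systems.items()}


table = efficiency_table()
brain = table["human brain"]
for name, value in table.items():
    # Orders of magnitude by which the brain leads this system (0 for itself).
    gap = math.log10(brain / value)
    print(f"{name:20s} {value:.2e} ops/J  (brain ahead by 10^{gap:.1f})")
```

On these figures the brain comes out near 10¹⁴ operations per joule against roughly 10⁹ for the supercomputers, a gap of around five orders of magnitude.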

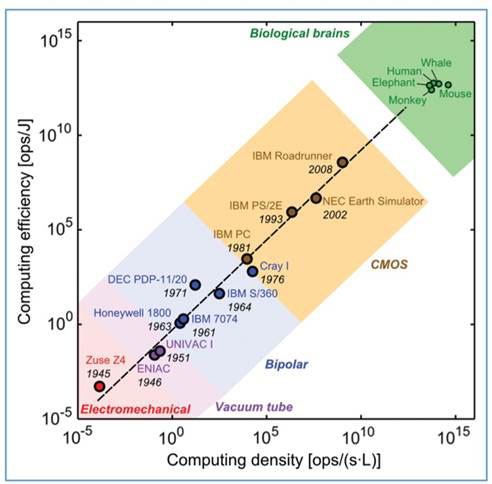

The computer has come a long way since the first room-sized electromechanical computer was demonstrated in the 1940s. Electronic transistors were introduced in 1960, and computers shrank to the size of personal computers around 1980. Since then, the density of transistors (operations/second/L) has increased exponentially along with efficiency (operations/J) (Figure 1) [17]. The next goal is the Exaflop (10¹⁸ flops). But with current technology, that would consume hundreds of MW and generate more heat, requiring even more cooling.

Figure 1 Computer efficiency versus computer density
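The "hundreds of MW" estimate for an Exaflop machine can be checked with a rough extrapolation. This is a sketch only. It assumes that efficiency stays fixed at the level of the IBM Sequoia figures quoted earlier in this article, and the function name `power_at_fixed_efficiency` is hypothetical.

```python
EXAFLOP = 1e18  # operations per second

# Sequoia figures as quoted in the article.
SEQUOIA_OPS_PER_S = 16.325e15
SEQUOIA_WATTS = 7.9e6


def power_at_fixed_efficiency(target_ops_per_s, ref_ops_per_s, ref_watts):
    """Watts needed if operations per joule stay at the reference machine's level."""
    ops_per_joule = ref_ops_per_s / ref_watts
    return target_ops_per_s / ops_per_joule


exaflop_megawatts = (
    power_at_fixed_efficiency(EXAFLOP, SEQUOIA_OPS_PER_S, SEQUOIA_WATTS) / 1e6
)
print(f"Exaflop at Sequoia-level efficiency: about {exaflop_megawatts:.0f} MW")
```

The result is several hundred megawatts, before counting any extra power for cooling.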

The industry is hitting a new bottleneck. Transistors are packed about as densely as they can be on a 2D chip, and the need for data transport has made the problem worse, requiring more wires, more volume and more cooling. This is where the industry is turning to the human brain for inspiration.

The human brain accounts for just 2 % of the body’s volume and consumes 20 % of its total energy demand. But it is fantastically efficient. It can achieve five or six orders of magnitude more computing than current computers for each Joule of energy consumed (while also performing in parallel all the activities required for keeping the brain cells alive, which is part of the reason why some of us think organisms are quantum coherent; see [18] The Rainbow and the Worm, The Physics of Organisms, I-SIS publication).

In a typical computer, one-thousandth of 1 % of the volume is used for transistors and other logical devices, as much as 96 % for transporting heat, 1 % for electrical communication (transporting information), and 1 % for structural stability. In contrast, the brain uses only 10 % of its volume for energy supply, thermal transport and structural stability, 70 % for communication and 20 % for computation. The brain’s memory and computational modules are positioned close together, so data stored long ago can be recalled in an instant.

The functional density in current computers is 10 000 transistors/mm³ compared with 100 000 neurons/mm³ in the brain (each neuron is considered equivalent to about 1 000 transistors). So computer functional density is 10¹⁰/L while that of the brain is 10¹⁴/L.
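The conversion from per-cubic-millimetre to per-litre densities is a one-line calculation, sketched here in Python with the figures from the paragraph above. The helper name `per_litre` is hypothetical, and the equivalence of one neuron to about 1 000 transistors is the article's own assumption.

```python
MM3_PER_LITRE = 1_000_000  # 1 L = 1 dm^3 = 10^6 mm^3


def per_litre(units_per_mm3, transistor_equivalents_per_unit=1):
    """Convert a density per mm^3 into transistor-equivalents per litre."""
    return units_per_mm3 * transistor_equivalents_per_unit * MM3_PER_LITRE


computer_density = per_litre(10_000)       # transistors per litre
brain_density = per_litre(100_000, 1_000)  # each neuron counted as ~1 000 transistors

print(f"computer: {computer_density:.0e} per litre")
print(f"brain:    {brain_density:.0e} per litre")
print(f"ratio:    {brain_density // computer_density}")
```

On this accounting the brain packs ten thousand times more functional elements into each litre.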

A team at IBM Research Division in Zurich led by Bruno Michel took its cue from the 3D architecture of the human brain to improve on volume reduction and power efficiency [17].

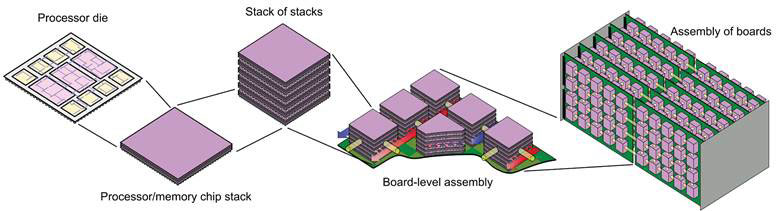

In some proposed 3D designs, stacks of individual microprocessor chips – on which the transistors could be wired in a branching network – are further stacked and interconnected on circuit boards, and these in turn are stacked together enabling vertical communication between them. The result is a kind of orderly fractal structure (Figure 2). The whole thing can be fluid cooled, and at some future date, power could even be delivered by energetic chemical compounds (as in the brain) with very little need for pumping.

Figure 2 Stacked chips in fractal structures for efficient volume reduction, communication and power efficiency

With volumetric cooled chip stacks, the overall volume is compressed by a factor of 10⁵ to 10⁶, resulting in the following volume fractions: transistors and devices 5 %, interconnects 25 %, power supply 30 %, thermal transport 30 %, structural stability 10 %.

If computing engineers aspire further to the operating speed of the Zetaflop (10²¹ operations per second), a brain-like structure will be necessary [19]. With today’s architectures, such a device would be larger than Mount Everest and consume more power than the current entire global demand.

Michel believes that such innovations should enable computers to reach the efficiency, if not the capability, of the human brain by around 2060, a hundred years after the introduction of electronic computers.

Using the world’s current fastest supercomputer and the new scalable, ultra-low power computer architecture described above, IBM claims to have simulated 530 billion neurons and 100 trillion synapses, matching the number in the human brain. But they do not have a supercomputer that works anywhere near the way the human brain works.

The human brain can simultaneously gather thousands of sensory inputs; interpret them as a whole and react appropriately, abstracting, learning, planning and inventing, all on a budget of 20 W. A computer of comparable complexity using current technology would drain about 100 MW.

Part of the brain’s capacity for power saving is thought to lie in its being “event-driven”; i.e., individual neurons, synapses and axons only consume power as they are activated. (Actually this is probably not true, as the idle brain cell is still busy metabolizing to keep alive.)

Neurons can receive input signals from up to ten thousand neighbouring neurons, elaborate the data, and then fire an output signal. About 80 % of neurons are excitatory; the remaining 20 % are inhibitory. Synapses link different neurons, and it is here that learning and memory are believed to take place (but see above).

In the virtual brain, each synapse has an associated weight value that reflects the number of signals fired by virtual neurons that travel along it. When a large number of neuron-generated signals travel through the synapse, the weight value increases and the virtual brain begins to learn by association.

The computer algorithm periodically checks whether each neuron is firing a signal: if it is, the adjacent synapses will be notified, and they will update their weight values and interact with other neurons accordingly. Crucially, the algorithm will only expend CPU time on the very small fraction of synapses that actually need to be fired, rather than on all of them – saving massive amounts of time and energy.

Thus, the virtual brain, like the real brain, is event driven, distributed, highly power-conscious, and overcomes some of the limitations of standard computers.
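The event-driven scheme described above can be illustrated with a toy model. This is not IBM's algorithm, whose details are not given here; it is a minimal sketch under stated assumptions. The class `EventDrivenNetwork`, its threshold and learning-rate parameters, and the simple rule "add a fixed amount to the weight each time a signal crosses the synapse" are all hypothetical, and inhibitory neurons are left out. The point it shows is that each step visits only the synapses leaving neurons that actually fired.

```python
class EventDrivenNetwork:
    """Toy network: work is done only for synapses leaving neurons that fired."""

    def __init__(self, threshold=1.0, learning_rate=0.1):
        self.threshold = threshold          # input needed for a neuron to fire
        self.learning_rate = learning_rate  # weight gain per signal carried
        self.outgoing = {}                  # pre -> {post: weight}
        self.potential = {}                 # accumulated input per neuron
        self.synapse_updates = 0            # counts the work actually done

    def connect(self, pre, post, weight=0.5):
        self.outgoing.setdefault(pre, {})[post] = weight

    def step(self, firing):
        """Process one tick for the given firing neurons; return who fires next."""
        touched = set()
        for pre in firing:
            synapses = self.outgoing.get(pre, {})
            for post, weight in synapses.items():
                # Deliver the signal, then strengthen the synapse that carried it.
                self.potential[post] = self.potential.get(post, 0.0) + weight
                synapses[post] = weight + self.learning_rate
                self.synapse_updates += 1
                touched.add(post)
        next_firing = set()
        for post in touched:
            if self.potential[post] >= self.threshold:
                next_firing.add(post)
                self.potential[post] = 0.0  # reset after firing
        return next_firing


net = EventDrivenNetwork()
net.connect("a", "b", 0.6)
net.connect("b", "c", 0.6)
for i in range(1000):  # many synapses whose source neurons never fire
    net.connect(f"idle{i}", "c", 0.6)

firing = {"a"}
for tick in range(3):
    fired_next = net.step(firing)
    print(tick, sorted(firing), "->", sorted(fired_next))
    firing = fired_next or {"a"}  # keep stimulating "a" when nothing fires

print("synapse updates performed:", net.synapse_updates)
```

Although the network holds over a thousand synapses, only the handful attached to active neurons are ever touched, which is the saving the article attributes to the event-driven design.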

IBM’s ultimate goal is to build a machine with human brain complexity in a comparably small volume and with power consumption down to ~1 kW. For now, the BlueGene/Q Sequoia supercomputer virtual brain uses 1 572 864 processor cores, 1.5 x 10¹⁵ bytes of memory and 6 291 456 threads, consumes some megawatts, and is still the size of a warehouse.

Article first published 13/03/13

There are 2 comments on this article.

Rory Short Comment left 16th March 2013 04:04:06

It seems to me that one of our problems as modern human beings is that we operate as though the current paradigm within which we interpret life is perfect, and as a consequence there is no longer any space for mystery and the unknown, and perhaps unknowable, in our lives. To my way of thinking this is extremely arrogant and dangerous.

Todd Millions Comment left 19th March 2013 16:04:28

One wonders at the programming available for such devices. Surely, as with the wetware version, the malware and spyware pre-embedded with Y2K as an excuse, to be activated after the "Reichstag fire" of 9/11, will cause cascades of data and routing conflicts. This has already resulted in considerable impairment to perceiving reality. HAL 9000, anyone?

It has been pointed out that we learn only by our errors (B. Fuller: 'error left, correction; error right, correction; the mean course of these successive errors becoming the true course'). Can an artificial intelligence, or even a comparator, match, mimic or transcend this limit? Can we, in the end?